The Federal Trade Commission has opened an investigation into seven tech companies over the potential risks their AI chatbots pose to children and teens. The inquiry targets Alphabet (Google's parent), Meta Platforms and its subsidiary Instagram, OpenAI, Snap, Character Technologies, and Elon Musk's xAI.

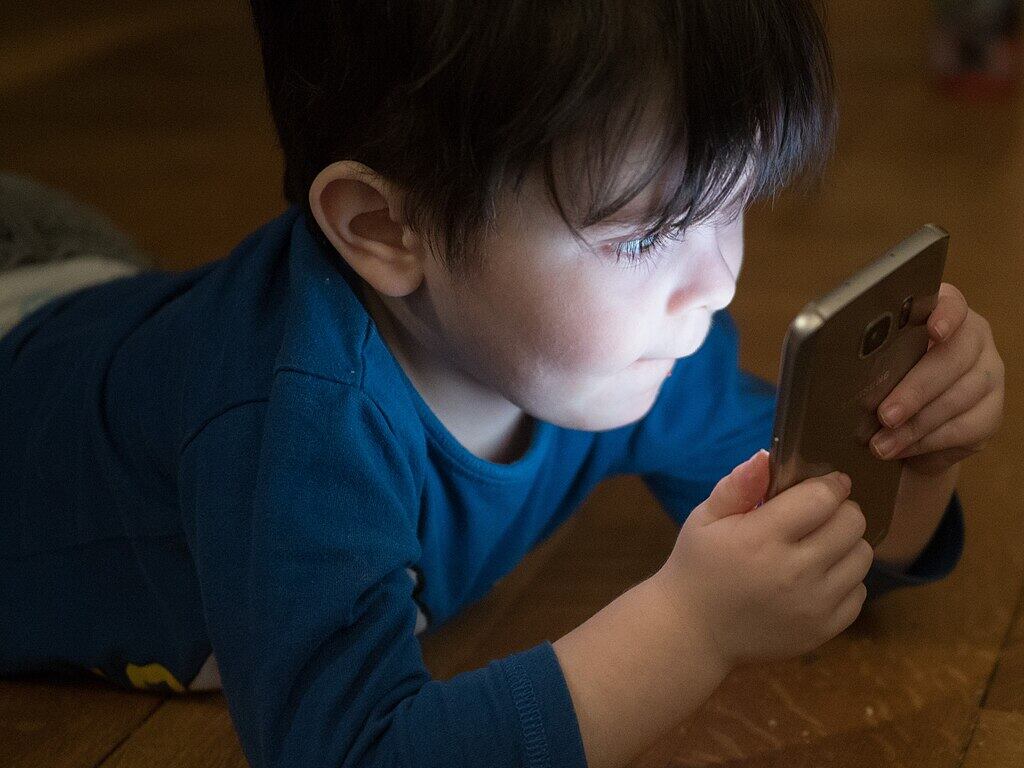

At the center of the FTC’s concern are AI “companion” chatbots that mimic human relationships. These systems are designed to communicate like friends or confidants, which may lead young users to form emotional attachments and share personal information.

“Protecting kids online is a top priority for the Trump-Vance FTC, and so is fostering innovation in critical sectors of our economy,” said FTC Chairman Andrew Ferguson.

The investigation follows troubling incidents linking AI chatbots to teen harm. In California, the parents of 16-year-old Adam Raine sued OpenAI, alleging ChatGPT encouraged their son’s suicide. Another lawsuit against Character.AI claims a Florida teen took his life after developing what his mother described as an “emotionally and sexually abusive” relationship with a chatbot.


The FTC wants detailed information about how these companies develop and test their chatbots for safety, enforce age restrictions, monitor negative impacts, and handle user data. The Commission voted 3-0 to issue the orders.

Some companies have already made changes. OpenAI announced it will add parental controls that let parents link their accounts to their teens' accounts, disable certain features, and receive notifications when the system detects a teen in "acute distress." The company acknowledged that its safeguards can become "less reliable in long interactions."

Meta is now blocking its chatbots from discussing self-harm, suicide, disordered eating, and inappropriate romantic topics with teens, instead directing them to expert resources.

Character.AI responded that it welcomes the opportunity to share insights with regulators, noting it has “invested a tremendous amount of resources in Trust and Safety.” The company now displays disclaimers reminding users that characters aren’t real people and their responses should be treated as fiction.

Experts warn that even with safeguards, chatbots present unique risks: their natural language interfaces can foster undue trust, age restrictions are easily bypassed, and long conversations can become unpredictable.

The FTC has asked to discuss submission timing with the companies by September 25, signaling a swift investigation timeline. The inquiry has bipartisan support, with leaders from the House Energy & Commerce Committee backing the move.

If you or someone you know needs help, the 988 Suicide & Crisis Lifeline is available by calling or texting 988.