Google DeepMind released Perch 2.0 on August 7, 2025, expanding its AI model’s capabilities beyond birds to include mammals, amphibians and human-made noise. The updated version better adapts to underwater environments like coral reefs and can disentangle complex acoustic scenes at much larger scale.

“What’s not to love about birds? They do all of the work in the forest: pollination, seed dispersal,” says Amanda Navine, Bioacoustic Researcher at LOHE Lab in Hawaii, who works with the technology to track endangered honeycreepers.

Perch 2.0 was trained on approximately 1.54 million source recordings drawn from public sources like Xeno-Canto, iNaturalist, Tierstimmenarchiv, and FSD50K, giving it a far larger, multi-taxa training set than earlier Perch releases.

On the BirdSet benchmark, Perch 2.0 attains AUROC scores of approximately 0.907-0.908. The accompanying paper also reports state-of-the-art results across the BEANS tasks, which are scored with accuracy and mAP rather than AUROC.
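For readers unfamiliar with the metric, AUROC is the probability that a randomly chosen positive clip receives a higher score than a randomly chosen negative one, so 0.5 is chance and 1.0 is a perfect ranking. The sketch below computes it for a single species on made-up labels and scores; the `auroc` helper and the data are illustrative assumptions, and how BirdSet aggregates the metric across species and datasets is not covered here.

```python
def auroc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical per-clip scores for one species (1 = species present in the clip).
labels = [1, 1, 0, 1, 0, 0]
scores = [0.92, 0.80, 0.75, 0.60, 0.30, 0.10]
example_auroc = auroc(labels, scores)  # 8 of 9 pairs are ordered correctly
```

The pairwise loop is quadratic and only suitable for small illustrations; evaluation code for real benchmarks would normally use a rank-based implementation from an established library.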

“Birds are fantastic. We learn a lot about what’s going on in a habitat just by listening to birds,” explains Tom Denton, Research Scientist at Google DeepMind, in the project video.

Hawaii faces a serious conservation challenge with its native birds. “We are known as the extinction capital of the world. Almost three-fourths of our native species have been lost,” Navine states. (For context, counts vary by taxon and source: the American Bird Conservancy, for example, records approximately 95 of 142 endemic Hawaiian bird species as extinct, about two-thirds or 67%.) The main threat is avian malaria carried by mosquitoes, which has historically devastated bird populations.

Denton elaborates: “Most of the birds that remain are all at high elevation, above where the mosquitoes want to live. However, with global warming, temperatures are rising and that mosquito line is increasing as well.”

Traditional audio analysis methods can’t keep pace with the crisis. “You could manually analyze all of this data, but if it takes you five years to get through it, by that time, your bird may have gone extinct in that area already,” Navine explains.

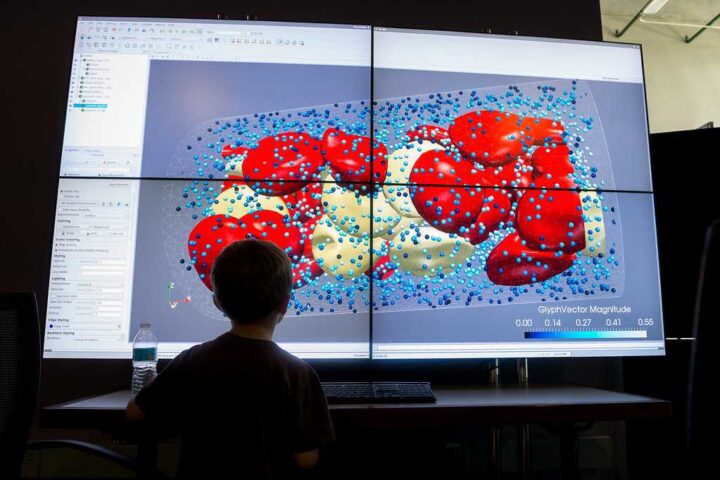

The new model enables what researchers call “Perch search” – scanning through hours of forest recordings to identify specific species rapidly. This helps monitor both adult and baby bird activity in areas treated for mosquitoes.
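One plausible way to picture this kind of search is nearest-neighbor lookup over audio embeddings: each short window of a recording is mapped to a vector, and windows whose vectors point in the same direction as a query call are returned first. The sketch below is a minimal illustration under that assumption, not the Perch implementation; the function names, window identifiers, and three-dimensional vectors are all hypothetical stand-ins for real model embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query, windows, top_k=3):
    """Return the top_k (window_id, similarity) pairs, most similar first."""
    scored = [(cosine_similarity(query, emb), window_id)
              for window_id, emb in windows.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(window_id, score) for score, window_id in scored[:top_k]]


# Hypothetical embeddings keyed by "site/timestamp"; real ones would come from the model.
windows = {
    "site_a/00:00:05": [0.9, 0.1, 0.0],
    "site_a/00:12:40": [0.1, 0.9, 0.2],
    "site_b/01:03:15": [0.8, 0.2, 0.1],
    "site_b/02:47:30": [0.0, 0.1, 0.9],
}
query = [1.0, 0.1, 0.0]  # embedding of one example call of the target species
hits = search(query, windows, top_k=2)
```

A brute-force scan like this is enough to show the idea; searching many hours of audio would call for an indexed or approximate nearest-neighbor structure.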

Perch 2.0 is trained in two phases: a prototype-learning classifier is trained first, followed by self-distillation. The team used Vizier for hyperparameter search, with training runs of up to roughly 300,000-400,000 steps.

The original Perch, launched in 2023, has been downloaded over 250,000 times according to DeepMind. Its vector search library now operates within Cornell’s BirdNET Analyzer. In Australia, the technology helped BirdLife Australia and the Australian Acoustic Observatory identify unique species, including a previously unknown population of the endangered Plains Wanderer.

“This is an incredible discovery – acoustic monitoring like this will help shape the future of many endangered bird species,” says Paul Roe, Dean Research at James Cook University, Australia.

The model includes tools for “agile modeling” – building new sound classifiers from a handful of examples through a combination of vector search and active learning. This method works for both forest and reef environments, producing good-quality classifiers quickly (often in under an hour in demonstration settings).
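The loop described above can be sketched roughly as follows: fit a small classifier on the embeddings of a few labeled clips, then ask a human to label the clip the classifier is least sure about, and repeat. The code below is a simplified illustration that assumes a logistic-regression classifier and uncertainty sampling; the actual classifier and selection strategy used in the Perch tooling may differ, and the two-dimensional embeddings and all function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability that embedding x contains the target sound."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic(embeddings, labels, epochs=200, lr=0.5):
    """Fit logistic regression with plain stochastic gradient descent."""
    weights = [0.0] * len(embeddings[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(embeddings, labels):
            error = predict(weights, bias, x) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def most_uncertain(weights, bias, unlabeled):
    """Active-learning step: pick the clip whose score is closest to 0.5."""
    return min(unlabeled, key=lambda x: abs(predict(weights, bias, x) - 0.5))


# Hypothetical embeddings: four labeled clips (1 = target species) and three unlabeled ones.
labeled = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
labels = [1, 1, 0, 0]
unlabeled = [[0.95, 0.05], [0.5, 0.5], [0.05, 0.95]]

weights, bias = train_logistic(labeled, labels)
next_to_label = most_uncertain(weights, bias, unlabeled)  # the ambiguous [0.5, 0.5] clip
```

Because the expensive embedding step happens once and only a small classifier is retrained between labeling rounds, each iteration is cheap, which is consistent with the short turnaround times mentioned above.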

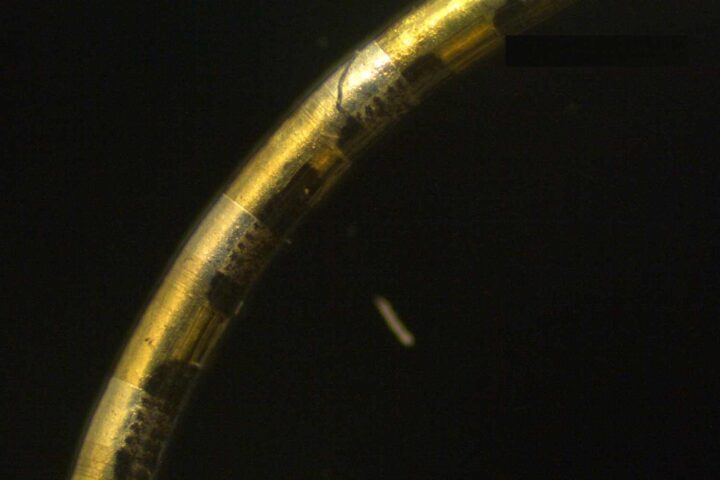

Perch 2.0 does have limitations. The arXiv paper notes that the training data contained almost no marine hydrophone audio: only a few dozen cetacean recordings, a mix of hydrophone and above-water recordings of marine mammals. Performance on marine benchmarks nonetheless remains strong. Label quality and geographic representation in the training data are further challenges.

Conservationists can access both the standard Perch model and SurfPerch (for underwater sounds) through Kaggle, with code resources available on GitHub’s google-research/perch repository.

Perch 2.0 expands its training beyond birds to include mammals, amphibians and anthropogenic sounds. The model is described in an arXiv preprint and is available on Kaggle and GitHub. Current applications include integration with BirdNET Analyzer and the Australian Acoustic Observatory, with field examples such as Hawaiian honeycreeper monitoring.